Hello everyone, hope you're having a great New Year! :)

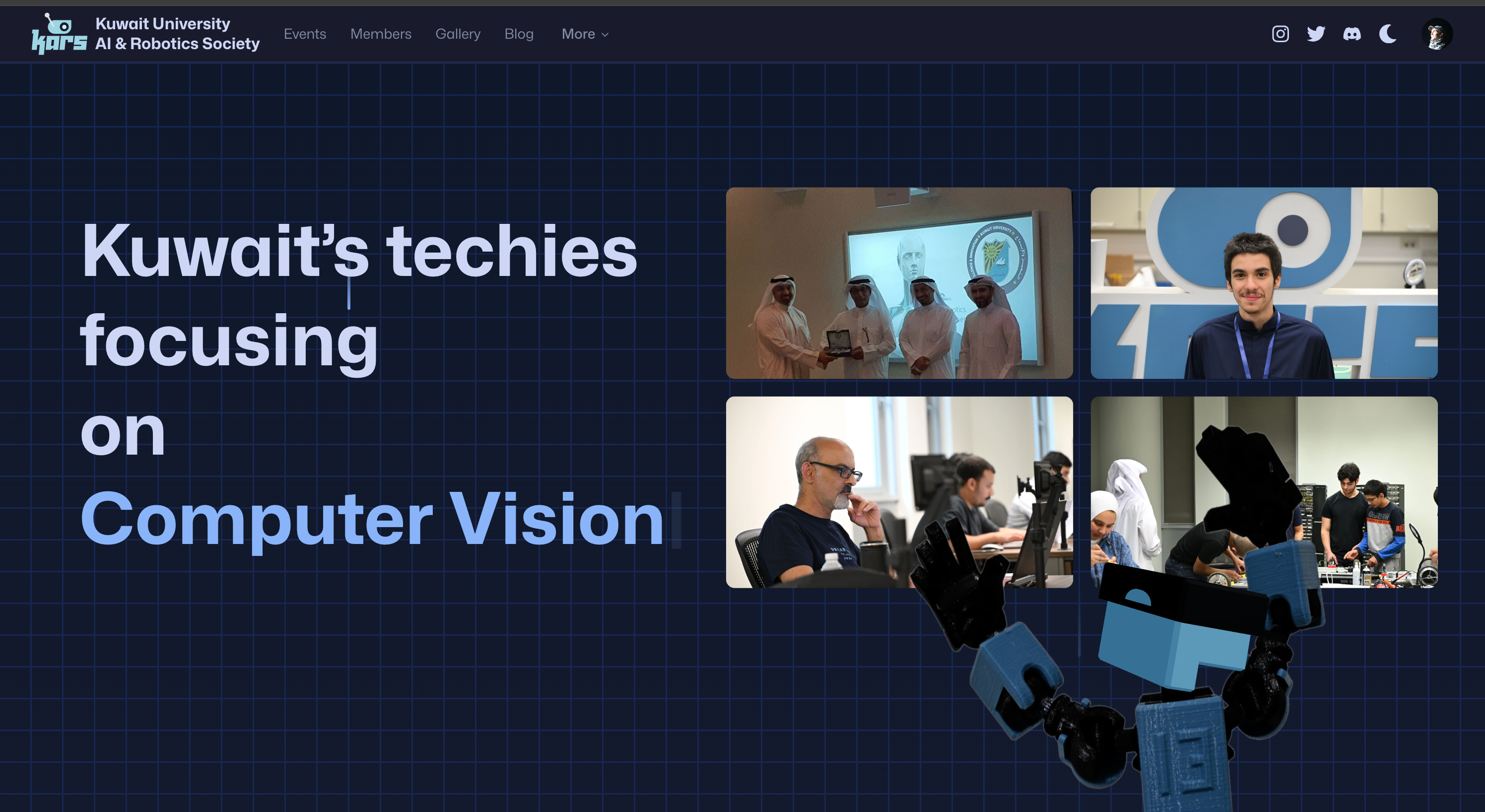

About a year ago, I had the idea of creating a database for Kuwait’s top engineers and robotics enthusiasts. Think LinkedIn, but specifically for like-minded individuals in Kuwait. Over the past year, I’ve been working on this project on and off, experimenting with tools and trying out new ones as they were released.

Since this is a fun project, I set out to explore all the fancy new toys in the web-dev world instead of relying on established tools that require extensive testing before production use. I also used Next.js canary features before they were officially released. In this blog, I will talk about my experience with and thoughts on these tools, and the process of building this over-engineered website. If you're not interested in the technical nitty-gritty, just take a tour around the website and enjoy. :)

Self-hosting

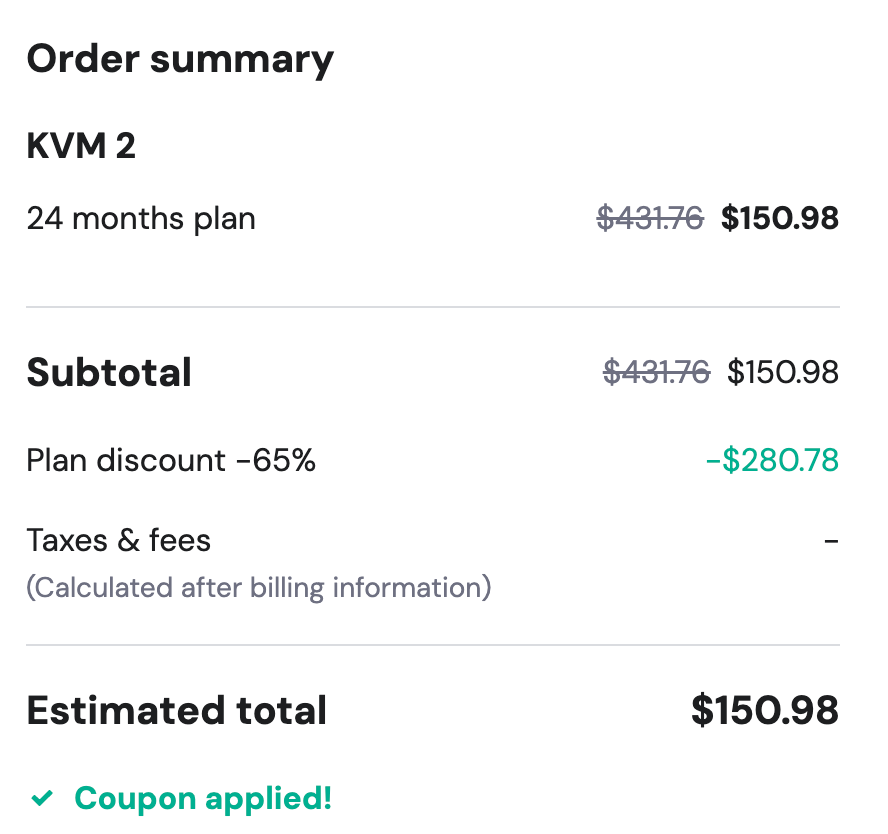

Since this is a non-profit society with minimal funding, we need to minimize our spending as much as possible. One of the main goals of this project was to create a high-quality website for the society while keeping costs low. Thankfully, we managed to do this by self-hosting everything. Our current bill for this project is $6.25/month ($150 for 2 years), plus a few dollars per year for the Cloudflare domain name.

VPS

Initially, we were hosting on Vercel, but decided to switch to avoid waking up to a huge bill. We also wanted to get our own analytics, notifications, image-transformations, uptime monitoring, and more — all for free.

We tried to rent a Hetzner VPS, but were met with an account ban! This is especially infuriating since they required us to provide personal information and government identification. We emailed their support, but they couldn't disclose why they banned us, so naturally I assumed it was because they were racist :D

Instead, we opted for a Hostinger VPS in France.

Purchasing it took a couple of clicks and we were up and running in minutes. It also came with Coolify pre-installed (more on this later) so the whole process was great.

The specs are as follows:

- 2 vCPU cores
- 8 GB RAM
- 100 GB NVMe disk space
- 8 TB bandwidth

Although the server is slower than I'd like, it cost us $150 for two years and runs most of our services, so I can't really complain!

Database

I'm using Supabase for the PostgreSQL database and auth service. I figured the free tier would last a lifetime, given that this website is for a small club. Auth emails are sent using Resend, which is a breeze to work with. The entire app runs on Prisma, and all requests are handled server-side.

I'm not using any RLS (Row Level Security) policies, as I found they hindered my workflow for every feature I implemented. Instead, I validate everything in server actions before calling Prisma directly.
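To make the validate-then-write approach concrete, here is a minimal, dependency-free sketch. Everything in it is hypothetical: the `createEvent` action, the `EventInput` and `Session` shapes, and the admin-only rule are illustrations, not code from the KARS site. A hand-written `validateEvent` stands in for a Zod schema, and the `EventStore` interface stands in for the Prisma client (in a real app that call would be something like `prisma.event.create({ data })`, assuming an `Event` model).

```typescript
// Hypothetical input for a "create event" form; not the real KARS schema.
interface EventInput {
  title: string;
  date: Date;
  capacity: number;
}

// Hypothetical session shape; in practice this would come from the auth provider.
interface Session {
  userId: string;
  role: "admin" | "member";
}

// Stand-in for the Prisma client: only the one call this action needs.
interface EventStore {
  create(data: EventInput): Promise<{ id: number } & EventInput>;
}

type ActionResult = { ok: true; id: number } | { ok: false; errors: string[] };

// The checks a Zod schema would normally perform.
function validateEvent(raw: Record<string, unknown>): { data?: EventInput; errors: string[] } {
  const errors: string[] = [];
  const { title: rawTitle, date: rawDate, capacity: rawCapacity } = raw;

  const title = typeof rawTitle === "string" ? rawTitle.trim() : "";
  if (title.length === 0) errors.push("title is required");

  const date = typeof rawDate === "string" ? new Date(rawDate) : new Date(NaN);
  if (Number.isNaN(date.getTime())) errors.push("date must be a valid date string");

  const capacity = Number(rawCapacity);
  if (!Number.isInteger(capacity) || capacity <= 0) errors.push("capacity must be a positive integer");

  return errors.length > 0 ? { errors } : { data: { title, date, capacity }, errors };
}

// Server-action-style handler: authorize, validate, and only then touch the database.
async function createEvent(
  raw: Record<string, unknown>,
  session: Session | null,
  store: EventStore,
): Promise<ActionResult> {
  if (!session || session.role !== "admin") {
    return { ok: false, errors: ["not authorized"] };
  }
  const { data, errors } = validateEvent(raw);
  if (!data) return { ok: false, errors };
  const row = await store.create(data);
  return { ok: true, id: row.id };
}
```

Because the checks run on the server before any query, an unauthorized or malformed request never reaches the database, which is the job RLS policies would otherwise do at the database level.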

Initially, I planned to self-host Supabase, but setting up auth and email templates was very cumbersome; you have to edit the .env file directly. I also had trouble getting it to work with Prisma and PgBouncer, so I postponed self-hosting for later.

One more downside is that Supabase doesn't give you an easy way to migrate regions (at least on the free tier); I would have to create a new project and redo all the settings and auth setup. This is unfortunate, since I chose Mumbai as the host region (closest to Kuwait) before buying a VPS in France. As a result, queries take 2-3 seconds, and the server takes around 3 seconds to resolve a page. This happens because every request travels user → Cloudflare → VPS (France) → database (Mumbai) and then all the way back to the user. Hopefully this will improve once I get around to self-hosting Supabase.

Coolify

Coolify is a drop-in Vercel replacement, which made the switch super easy. Whenever I push to main on GitHub, it rebuilds and redeploys my image with zero downtime! It also gives me analytics, Discord notifications, and image optimization with one click. Huge shout-out to Andras for this project.

Images

All images uploaded to the website are compressed and stored in a Cloudflare R2 bucket. Cloudflare caches all images by default and serves them through its CDN. Each uploaded image is stored in the database along with its resolution and blurhash URL.

I'm using next-image-transformation, a free drop-in replacement for Vercel's costly image optimization service (once again, shout-out to Andras!!). It resizes images on the fly using imgproxy, making it possible to dynamically serve the best image size for your screen. This, along with a custom <Image> component, allows me to lazy-load and serve images super fast while avoiding layout shift when images load.
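Roughly, "the best image size for your screen" means picking the smallest pre-defined width that still covers the image's on-screen size at the device pixel ratio, while the stored width and height let the page reserve the right amount of space before the image loads. Here is a minimal sketch with hypothetical helper names (`pickWidth`, `reservedHeight`); the candidate widths are assumed to match Next.js's default `deviceSizes`, and the actual URL format next-image-transformation hands to imgproxy is not shown.

```typescript
// Candidate widths the optimizer renders; assumed to mirror Next.js's default deviceSizes.
const WIDTHS = [640, 750, 828, 1080, 1200, 1920, 2048, 3840] as const;

// Smallest candidate that covers the on-screen width at the device pixel ratio,
// falling back to the largest one for very wide or very dense screens.
function pickWidth(cssWidth: number, devicePixelRatio = 1, widths: readonly number[] = WIDTHS): number {
  const needed = Math.ceil(cssWidth * devicePixelRatio);
  const sorted = [...widths].sort((a, b) => a - b);
  return sorted.find((w) => w >= needed) ?? sorted[sorted.length - 1];
}

// Height to reserve before the image arrives, computed from the stored width and height,
// so surrounding content doesn't jump when the image finishes loading.
function reservedHeight(displayWidth: number, intrinsicWidth: number, intrinsicHeight: number): number {
  return Math.round((displayWidth * intrinsicHeight) / intrinsicWidth);
}
```

For example, a 400px-wide slot on a 2x screen needs 800 physical pixels, so `pickWidth(400, 2)` selects the 828 variant rather than downloading the full-size original.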

Here's an example of what the blurhashes look like:

The only issue with the image-transformation tool is that it seems to time out when many images are requested at the same time, causing images to fail to load. You can observe this behavior at karskwt.com/gallery, where infinite scrolling breaks when you scroll too quickly. This is actually the last documented bug I haven't managed to solve, so I might have to switch back to normal images for the gallery.

Building the website

For all my projects now, I stick to the same stack. I'm so used to it that I can focus on building features instead of figuring out the inner workings of something new. With additions here and there, my workflow is usually the same: Next.js + Prisma ORM + shadcn-ui.

Next.js 13 & the Turbopack Hurdle

I started building this project with Next.js 13 and the App Router, which made Next.js a joy to use. Server actions let me set up type-safe API endpoints QUICKLY, the Prisma schema defines my entire database, Zod validations let me safely validate my data, and so on. It's really fun having your entire stack, both frontend and backend, in TypeScript.

Throughout the development of this project, new versions were released and I upgraded as time went on. The experience was mostly smooth, but when Next.js 15 came out, I attempted to upgrade for the new features (hydration diffs) and performance improvements. Unfortunately, Turbopack, the new bundler, caused numerous issues in my project. Compatibility problems with some dependencies and unstable builds led me to revert to Next.js 14. Thankfully, I haven't missed out on much, since I've been using React 19 canary features, such as form actions, since they were released.

If you haven't learned NextJS yet, you're missing out! I'm telling you, it made web-dev fun again.

Using nuqs for search

One of the things we tried to do was use nuqs instead of useState whenever possible.

It updates the URL's search params on each keystroke. This lets you share your search query or current state easily, as all the data is stored in the URL. An example of this is the event attendance form:

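```tsx
import { parseAsBoolean, useQueryState } from "nuqs";

// Inside the attendance form component:
// ?formOpen=true opens the form as soon as the page loads
const [open, setOpen] = useQueryState(
  "formOpen",
  parseAsBoolean.withDefault(false),
);

// ?code=... pre-fills the attendance code (empty string when absent)
const [attendanceCode, setAttendanceCode] = useQueryState("code", {
  defaultValue: "",
});
```

With this, we can share a QR code that, when scanned, sends you to the website with the form already open and the code filled in. With its simplicity, nuqs became a regular part of my workflow.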
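Since both values live in the URL, the QR code only needs to encode a link carrying the right search params. Here is a small sketch using the standard `URL` API: the `formOpen` and `code` keys match the attendance-form snippet, but the event URL and both helper functions (`buildAttendanceLink`, `readAttendanceParams`) are hypothetical, and in the app itself nuqs does the reading for you.

```typescript
// Build the link encoded in the QR code: opening it pre-opens the form and pre-fills the code.
function buildAttendanceLink(eventUrl: string, code: string): string {
  const url = new URL(eventUrl);
  url.searchParams.set("formOpen", "true"); // parsed by parseAsBoolean on the page
  url.searchParams.set("code", code); // read by useQueryState("code")
  return url.toString();
}

// Roughly what the page reads back from the URL (nuqs handles this in the real component).
function readAttendanceParams(link: string): { open: boolean; code: string } {
  const params = new URL(link).searchParams;
  return {
    open: params.get("formOpen") === "true",
    code: params.get("code") ?? "",
  };
}
```

Because `URLSearchParams` handles the encoding, codes containing spaces or symbols survive the round trip from QR code to form field.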

To Bun or not to Bun?

Going into this project, I really wanted to try out Bun. From the website:

Bun is a fast all-in-one JavaScript runtime

Bun, designed as a drop-in replacement for Node.js, offers numerous out-of-the-box features such as shell scripting, file operations, hashing, native JSX parsing, and bundling. They also recently added first-class S3 integration, which would've been helpful for our Cloudflare R2 usage.

All of these features would fit this project perfectly, so I was really excited to try it out! However, I couldn't get the cache to work: Next.js pages were constantly rebuilt on refresh. I will definitely revisit Bun in the future.

Linters, formatters, and the amazing MillionJS tool

I was all-in on the Biome hype train. A drop-in replacement for ESLint and Prettier that is many times faster sounds great. I started using it and everything went well. The only reason I dropped it, which might sound silly, is that its formatter doesn't sort TailwindCSS classes, so my classes were not getting sorted on save. I know this might not seem like a good reason to stop using it, but I'm just too used to the order of sorted TailwindCSS classes. I will wait for them to develop it further before trying it again (or maybe Bun will develop a linter/formatter?); for now, I will stick to ESLint and Prettier.

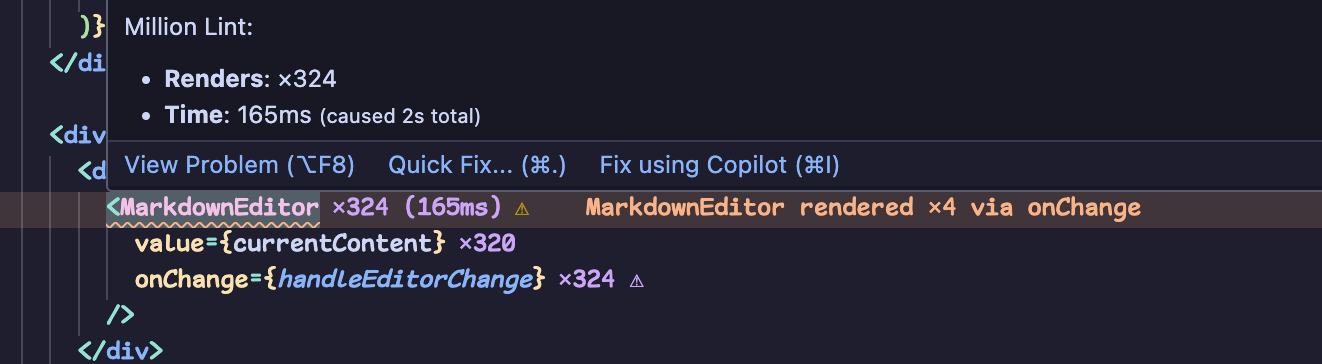

Another tool I've added to my toolbox is MillionJS. Million lets me see which components are causing unnecessary re-renders so I can optimize my code.

At the end of this project, a new tool called react-scan was released as both a standalone extension AND an update to MillionJS, making it even better. This is one of those tools you didn't know was missing from your workflow until you used it. Huge shout-out to Aiden for releasing this tool.

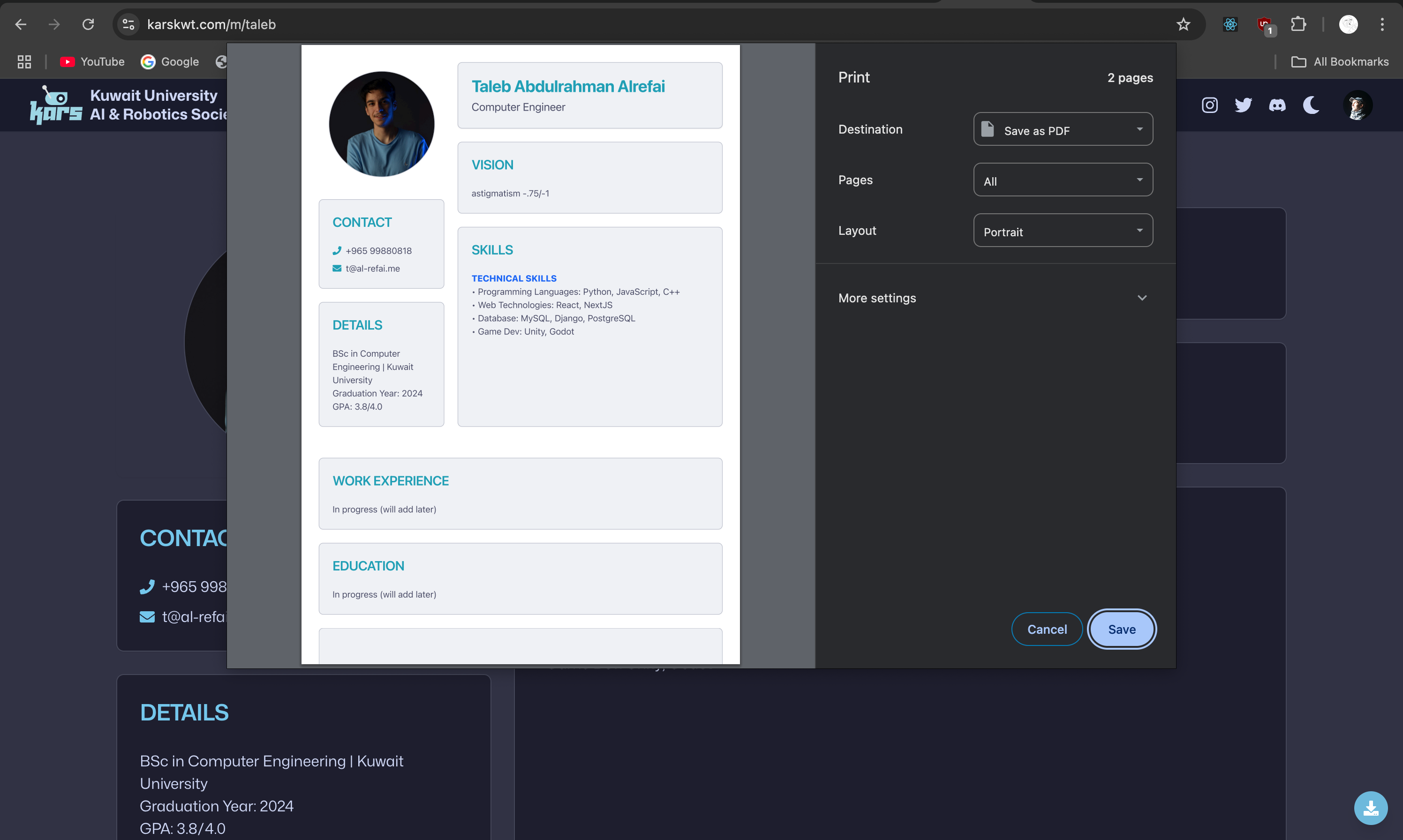

What makes our CV pages special?

The main goal, and the biggest feature we've advertised for this website, is the members page, where each member can have their own CV page. The best part is that you can fill in your CV using a form OR using JSON. We provide the format for the JSON and a pre-written prompt, so you can give any LLM your current PDF CV along with the prompt, and it will convert it into JSON compatible with our website. This makes moving your CV to the website easy, and now your data is in a searchable format available to all! You can also download your CV from the website as a PDF, so you have two ways of sharing and accessing your CV wherever you are.
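The actual JSON schema used by the site isn't reproduced in this post, so the shape below (`fullName`, `headline`, `experience`, `skills`) is a hypothetical, heavily reduced stand-in. The sketch shows the general idea: whatever the LLM returns is parsed and checked against the expected structure before it is saved, so malformed output is rejected instead of breaking the CV page.

```typescript
// Hypothetical, reduced CV shape (not the actual KARS schema).
interface Experience {
  title: string;
  organization: string;
  startYear: number;
  endYear?: number; // omitted for a current position
}

interface CV {
  fullName: string;
  headline: string;
  experience: Experience[];
  skills: string[];
}

const isNonEmptyString = (v: unknown): v is string => typeof v === "string" && v.trim().length > 0;
const isInt = (v: unknown): v is number => typeof v === "number" && Number.isInteger(v);

function isExperience(v: unknown): v is Experience {
  if (typeof v !== "object" || v === null) return false;
  const { title, organization, startYear, endYear } = v as Record<string, unknown>;
  return (
    isNonEmptyString(title) &&
    isNonEmptyString(organization) &&
    isInt(startYear) &&
    (endYear === undefined || (isInt(endYear) && endYear >= startYear))
  );
}

// Parse the LLM's output and reject anything that doesn't match the expected shape.
function parseCV(json: string): CV | null {
  let data: unknown;
  try {
    data = JSON.parse(json);
  } catch {
    return null; // not valid JSON at all
  }
  if (typeof data !== "object" || data === null) return null;
  const { fullName, headline, experience, skills } = data as Record<string, unknown>;
  const ok =
    isNonEmptyString(fullName) &&
    typeof headline === "string" &&
    Array.isArray(experience) &&
    experience.every(isExperience) &&
    Array.isArray(skills) &&
    skills.every(isNonEmptyString);
  return ok ? (data as CV) : null;
}
```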

Styling & theme

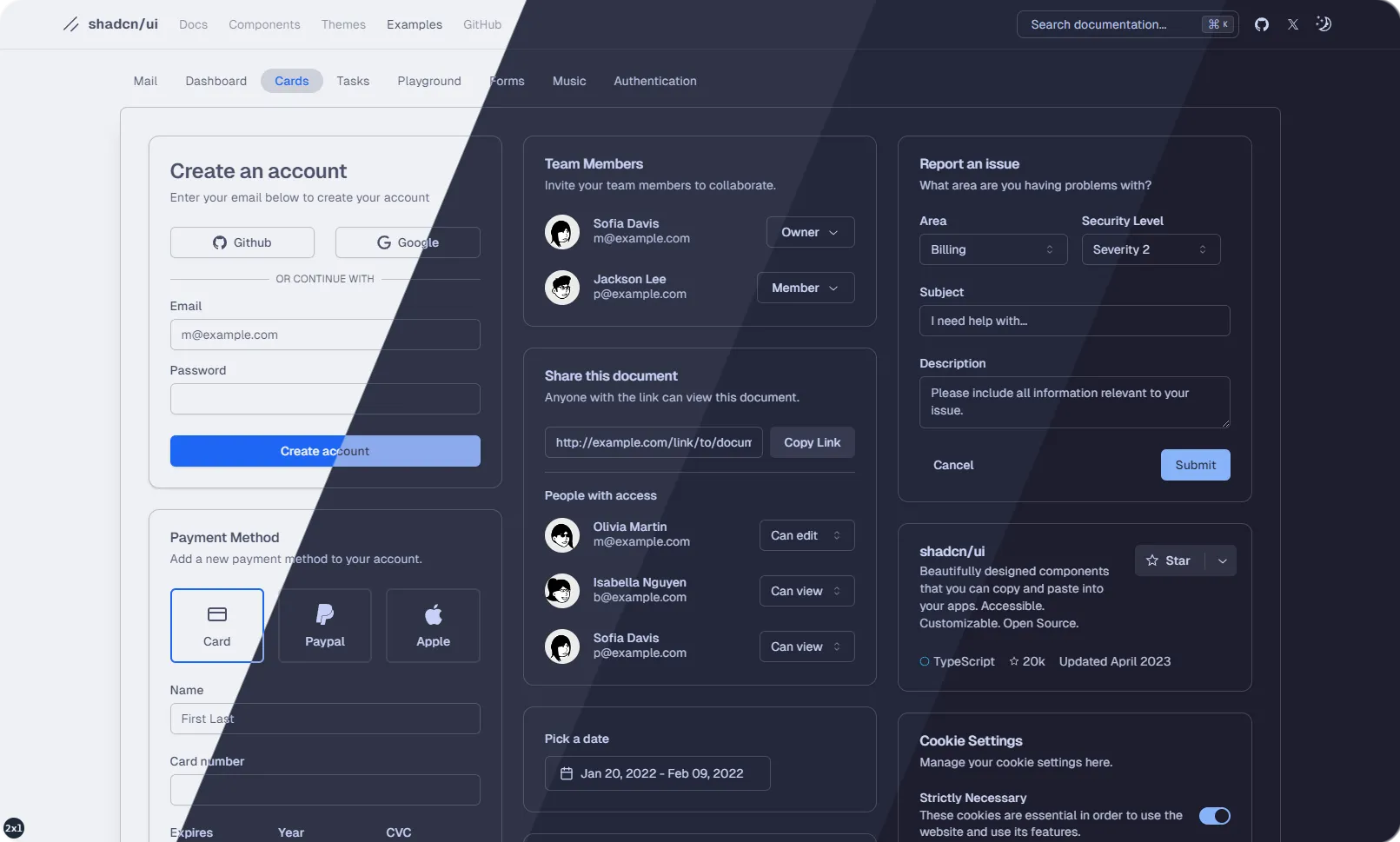

shadcn-ui

Like any sane React developer these days, I'm using shadcn-ui to build all my components. Built on top of radix-ui, shadcn-ui gives you the raw component files and makes it super easy to customize them to your liking. With a growing community and v0 support (which I will talk about later), shadcn-ui has become my default component library.

Catppuccin

Wherever I go, if it's an option, I use Catppuccin as my theme. My IDE, terminal, tmux, and websites all use Mocha/Latte for dark and light mode. This site is no exception: I found a Tailwind Catppuccin plugin, a Tailwind prose plugin, and a shadcn-ui styling pack for this theme. I also made a small page (karskwt.com/palette) to help me see the colors.

I can use any of the colors wherever I like and it will fit, I just love it so much.

Mona-Sans

The font used throughout the website is Mona Sans, a variable font made by GitHub that I've grown to like.

Framer motion & hover.dev

A while ago, while working on my graduation project, I stumbled across a YouTuber by the name of Tom is Loading, who breaks down the process of creating framer-motion components. I also saw that he offers premium components on his site hover.dev. I went ahead and bought the pro license, and now I frequently use his components in my projects. You can see them scattered across the website, HEAVILY modified to fit my needs.

Adding Framer-motion components gave so much character to the website, which everyone seems to love.

An example of this is the images on the right which grow as you scroll down the page.

Fun fact: new images are fetched every day by a database-level cron job using pg_cron. It fetches 4 random landscape images from the database. Since we store the width and height of each image, a computed column calculates the aspect ratio and determines whether the image is landscape or portrait.
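On the site this logic lives in the database (a pg_cron job plus a computed column), but the same idea can be sketched in TypeScript: derive the aspect ratio from the stored width and height, keep the landscape images, and draw four of them at random. `StoredImage` and `pickDailyLandscapes` are hypothetical names, and treating only ratios strictly above 1 as landscape (so square images are excluded) is an assumption.

```typescript
interface StoredImage {
  id: number;
  width: number;
  height: number;
}

// The value a computed aspect-ratio column would hold: above 1 means wider than tall.
const aspectRatio = (img: StoredImage): number => img.width / img.height;
const isLandscape = (img: StoredImage): boolean => aspectRatio(img) > 1;

// Pick `count` distinct landscape images uniformly at random (partial Fisher-Yates shuffle).
// `random` is injectable so the selection can be made deterministic in tests.
function pickDailyLandscapes(
  images: StoredImage[],
  count = 4,
  random: () => number = Math.random,
): StoredImage[] {
  const pool = images.filter(isLandscape); // filter copies, so the input is not mutated
  const n = Math.min(count, pool.length);
  for (let i = 0; i < n; i++) {
    // Swap a random not-yet-chosen image into position i.
    const j = i + Math.floor(random() * (pool.length - i));
    [pool[i], pool[j]] = [pool[j], pool[i]];
  }
  return pool.slice(0, n);
}
```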

Have you noticed that our robot mascot MAX (based on the Lucky 13 3D model) moves his head around to follow your mouse? This is done using ThreeJS. I can't say I enjoyed working with quaternions again, as it has been years since I actively worked on game development, but I believe the results are worth it :D
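Here is a dependency-free sketch of the kind of math involved: map the pointer to a clamped yaw and pitch, build a target quaternion from them, and slerp toward it each frame so the head turns smoothly. In three.js the equivalent calls would be `Quaternion.setFromEuler` (with an `Euler` in `"YXZ"` order) and `Quaternion.slerp`. The rotation limits, the smoothing factor, the assumption that the model faces +Z, and all function names below are assumptions, not MAX's actual code.

```typescript
type Quat = { x: number; y: number; z: number; w: number };

const MAX_YAW = Math.PI / 4; // assumed limit: 45° left/right
const MAX_PITCH = Math.PI / 8; // assumed limit: 22.5° up/down
const SMOOTHING = 8; // assumed: higher values make the head snappier

const clamp1 = (v: number): number => Math.max(-1, Math.min(1, v));

// Pointer normalized to [-1, 1] (0, 0 = screen centre, +y = up), for a model facing +Z.
// Returns yaw about Y followed by pitch about X (Euler order "YXZ"), i.e. qYaw * qPitch.
function targetRotation(nx: number, ny: number): Quat {
  const yaw = clamp1(nx) * MAX_YAW;
  const pitch = -clamp1(ny) * MAX_PITCH; // a negative X rotation tilts a +Z-facing head upward
  const cy = Math.cos(yaw / 2), sy = Math.sin(yaw / 2);
  const cp = Math.cos(pitch / 2), sp = Math.sin(pitch / 2);
  return { x: cy * sp, y: sy * cp, z: -sy * sp, w: cy * cp };
}

// Spherical linear interpolation from a to b by t in [0, 1].
function slerp(a: Quat, b: Quat, t: number): Quat {
  let dot = a.x * b.x + a.y * b.y + a.z * b.z + a.w * b.w;
  let { x: bx, y: by, z: bz, w: bw } = b;
  if (dot < 0) {
    // q and -q are the same rotation; flip b to take the shorter arc.
    dot = -dot; bx = -bx; by = -by; bz = -bz; bw = -bw;
  }
  let k0 = 1 - t;
  let k1 = t;
  if (dot < 0.9995) {
    const theta = Math.acos(dot);
    const s = Math.sin(theta);
    k0 = Math.sin((1 - t) * theta) / s;
    k1 = Math.sin(t * theta) / s;
  } // otherwise the rotations are nearly equal and plain lerp is accurate enough
  const x = k0 * a.x + k1 * bx, y = k0 * a.y + k1 * by;
  const z = k0 * a.z + k1 * bz, w = k0 * a.w + k1 * bw;
  const len = Math.hypot(x, y, z, w);
  return { x: x / len, y: y / len, z: z / len, w: w / len };
}

// One animation frame: ease the current head rotation toward the pointer, frame-rate independent.
function stepHead(current: Quat, nx: number, ny: number, dtSeconds: number): Quat {
  const t = 1 - Math.exp(-SMOOTHING * dtSeconds);
  return slerp(current, targetRotation(nx, ny), t);
}
```

Quaternions are used here rather than interpolating the two angles directly because slerp always follows the shortest rotation between poses and avoids gimbal-lock artifacts.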

The blog page

As this is one of the most worked-on features, I've decided to dedicate an entire section to it. The features of this blog are heavily inspired by Josh Comeau, who has detailed everything about how he built his own blog.

His blog is a huge resource for, ironically, how to build blogs. One thing we've borrowed from him is the like button of this blog (which you should definitely click btw). It uses the use-sound hook he shows on his blog.

What is MDX?

Before we delve into the specifics, let's talk about MDX - the technology powering this very blog post. MDX is a powerful combination of Markdown and JSX, allowing us to write content with the simplicity of Markdown while embedding interactive React components.

This allows us to do all kinds of things, such as embedding JSX components right inside our blog posts; for example:

```mdx
<Card>
  <CardHeader>Check this out</CardHeader>
  <CardContent>
    <Button>Click me</Button>
  </CardContent>
</Card>
```

would yield the following:

Why do we need MDX?

Well, for starters, it looks so cool. Have you seen how the code blocks are highlighted in this blog? How the images are styled? The embedded YouTube videos? Wait, look at this:

We have access to LaTeX inside our blog! And this isn't even scratching the surface of the features we get with MDX.

When we built this blog, we had 2 goals in mind:

- Build a place where researchers can post their papers, and perhaps make them interactive.
- Build a place where we can post easy-to-follow tech-related tutorials.

Imagine your paper describes a project structure; with Mermaid charts, you can easily illustrate it like this:

```mdx
<Mermaid chart="
graph TD
A[Student] -->|Interested in AI/Robotics| B{Is a KU student?}
B -->|Yes| C[Apply to KARS]
B -->|No| D[Explore other options]
C -->|Application accepted| E[Join KARS]
C -->|Application rejected| F[Improve skills and reapply]
E -->|Participate in| G[KARS Events]
E -->|Contribute to| H[KARS Projects]
G --> I[Gain experience]
H --> I
I -->|Continuous learning| C
" />
```

and the result would look like this:

Or perhaps you have a bar chart inside your paper?

What if your paper explained how a sorting algorithm works, and you let readers look at the code, see how it runs, and change it, all within your blog? With CodeSandbox, you can!

Writing a tutorial and need to quickly showcase a CodePen? Here you go:

I think I've made a good system for writing interactive, fun-to-read blogs. Hopefully it catches on and we get Kuwait University academics to use it!! :)

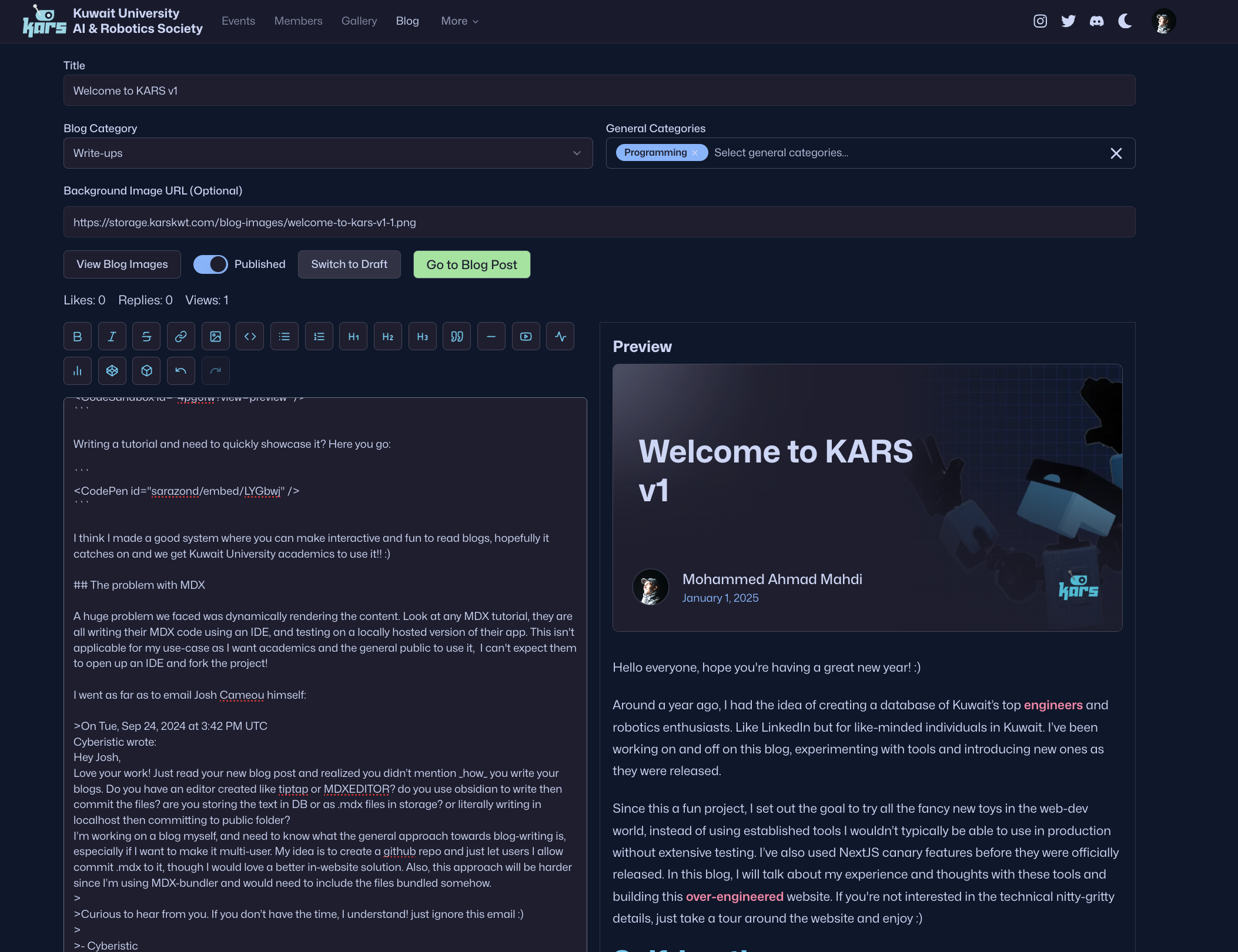

The problem with MDX

A huge problem we faced was rendering the content dynamically. Look at any MDX tutorial: they all write their MDX in an IDE and test it on a locally hosted version of their app. That doesn't work for my use case, since I want academics and the general public to use it; I can't expect them to open up an IDE and fork the project!

I went as far as emailing Josh Comeau himself:

On Tue, Sep 24, 2024 at 3:42 PM UTC

Cyberistic wrote:

Hey Josh,

Love your work! Just read your new blog post and realized you didn’t mention how you write your blogs. Do you have an editor created like tiptap or MDXEDITOR? do you use obsidian to write then commit the files? are you storing the text in DB or as .mdx files in storage? or literally writing in localhost then committing to public folder?

I’m working on a blog myself, and need to know what the general approach towards blog-writing is, especially if I want to make it multi-user. My idea is to create a github repo and just let users I allow commit .mdx to it, though I would love a better in-website solution. Also, this approach will be harder since I’m using MDX-bundler and would need to include the files bundled somehow. Curious to hear from you. If you don’t have the time, I understand! just ignore this email :)

- Cyberistic

and his response:

Hi there!

Right, I should probably mention this somewhere. I edit the MDX files in VS Code, preview on localhost, and commit + deploy to publish. I store all of the metadata (title, publish date) in frontmatter. Pretty much the most lo-fi solution possible, but it works great for me.

You mentioned multi-user: that does complicate things a bit. If all of the authors are developers, I think this approach can still work, but I wouldn't suggest it if you want non-developers to contribute content. In that case I'd suggest using a CMS like Sanity or Contentful, and trying to find some way to map special tags to custom MDX components. I know this is possible, but it seems like a lot of work to me.

I've added a little paragraph to the blog post explaining this. Thanks for reaching out!

—Josh

Man, was he right! It is a lot of work to solve. This problem cost me days, and I'm still not sure my implementation is correct, as it feels too "hacky". I've created an editor page with a preview, and used @mdx-js/mdx to compile the content on the fly.

For reference, this is what it looks like right now as I'm writing this blog:

This approach works, but not without its setbacks. For starters, I couldn't figure out how to pass hooks such as useState and useEffect to the compiler; I had to resort to creating components that take variables and manage state internally. I also had to switch away from mdx-bundler by Kent C. Dodds. It is an excellent tool that bundles MDX content quickly, but I couldn't figure out how to dynamically give it components! I'm still using it for pages like karskwt.com/guidelines, but like other MDX tools out there, it isn't made for non-developers. All of them follow the same 'edit in IDE, commit to repo' workflow. Furthermore, I had to dynamically remove the keyword "import" at the start of unescaped sentences, unless it was in a code block, or else my MDX would fail to compile. Right now I'm using next-mdx-remote/rsc, which is good enough for now.
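The `import` workaround can be sketched as a line-by-line pass that tracks whether it is inside a fenced code block and, everywhere else, drops a leading lowercase `import` so the MDX compiler doesn't parse the line as an ES module import. This is a hypothetical reconstruction, not the site's actual code: `stripProseImports` is an illustrative name, it only recognizes triple-backtick fences, and it works line by line rather than by sentence.

```typescript
const FENCE = "`".repeat(3); // three backticks, the marker that opens and closes a fenced code block

// Outside fenced code blocks, drop a leading `import` keyword so the MDX compiler
// doesn't try to treat the line as an ES module import.
function stripProseImports(source: string): string {
  let inFence = false;
  return source
    .split("\n")
    .map((line) => {
      if (line.trimStart().startsWith(FENCE)) {
        inFence = !inFence; // an opening or closing fence
        return line;
      }
      if (inFence) return line; // code inside fences is left untouched
      return line.replace(/^import\s+/, ""); // only the standalone word, so "imported" survives
    })
    .join("\n");
}
```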

I definitely want to revisit this issue later; it seems like the ecosystem is missing a dynamic MDX library.

The state of AI tools

I want to end this blog with my thoughts on AI tools. When I started working on this, I was using Cursor as my editor to create pages (yes, I used Cursor before Composer, and before it was cool :D), along with Copilot. I quickly noticed that I had developed a bad habit of idling after each keystroke, waiting for the AI to complete my thoughts. A tool that was supposed to make me write faster made me slow down and let it write some mess, which I then had to go back and clean up to my liking. I felt the same way about Cursor. Although it saved me time getting the page "scaffolding" running based on another page's template (check how /members and /event have the same header and search bar, for example, with the only difference being the keywords "events" and "members"), it was still polluting the way I write code, and my job turned from developing to cleaning up messy code.

One of my favorite channels "Dreams of Code" has a great video on this where he highlighted the same experience:

So I went back to VS Code, with my Copilot education subscription switched off. I found another tool called Supermaven AI, whose free version offers basic text completion. It doesn't write huge chunks of code, but it completes variable names and whatnot, and it's perfect! Only a month ago did I switch Copilot back on, because I missed the "Quick fix" feature; sometimes I would paste code in and mess up brackets or make other silly formatting mistakes, and it would fix them for me. It's also really good at inferring types in TypeScript. Now, I try to make a habit of never waiting for Copilot to suggest something. I'm the machine's master; it shall not have control over me (yet).

Another tool I began using for this project is v0. It's so helpful that I started paying $20/month for it. For reference, this is my ONLY subscription ever! I don't subscribe to YouTube Premium, Netflix, credit cards, or anything else at all. If I need a service, I subscribe for a month or two and then unsubscribe, but v0 is one of the rare exceptions: I have been subscribed for months now. It uses the same shadcn-ui stack I use (because it's literally built by shadcn himself), and makes it super easy to create components and iterate on them. For example, the fancy like button you see on the side (or at the bottom on mobile) took me around 20 iterations to get right with v0. While I wouldn't use it to build full websites just yet (maybe one day), if you know what you're doing, it can get you far! I consider myself a v0 power user now, with some applications having over 150 iterations.

TL;DR: As of the beginning of 2025, I use AI as a tool for coding, not a replacement for it. Will that change in the future? Maybe.

~ End

That's pretty much it!

Hope you enjoyed reading the blog and learned something from it. If you have any questions, I will be happy to answer them.

We're also looking for people who are inspired to write blogs. It could be anything from discoveries, write-ups, tutorials, experiences or whatever comes to your mind.

Also, leave a like?!?! thx

First blog completed, all thanks to Allah (SWT), the Beneficent, the Merciful. :)